Prepared for the Unexpected

Most AI learns from failure. CVEDIA trains for scenarios before they happen, using synthetic data to simulate the rare, the difficult, and the conditions your cameras will actually face.

Real-World Data Has Real-World Limits

Traditional AI training relies on capturing thousands of hours of real footage, labeling it by hand, and hoping your dataset includes the edge cases that matter. It rarely does.

You can't wait for fog to roll in. You can't stage a break-in at night. You can't capture every lighting condition, every camera angle, every unusual behavior. And by the time you encounter a failure in production, it's already too late.

CVEDIA takes a different approach.

Your Deployment Environment Isn't in the Training Set

Models trained on popular datasets perform well on similar data. But security cameras, traffic systems, and defense sensors operate in conditions those datasets never captured.

Real-World Camera Conditions

Aging infrastructure with low resolution and compression artifacts. Lens obstructions such as spider webs, dirt, and water droplets. Motion blur from camera shake and vehicle-mounted instability. Lens distortion and fisheye aberrations that warp expected object shapes.

Dataset Bias Issues

Position bias, with objects clustered in the center of the frame. Size distributions skewed toward medium objects, so small targets underperform. Daytime bias in training data, leaving night and IR scenarios as edge cases. Annotation inconsistency, with human labelers introducing variance.
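Position and size bias can be checked directly on a dataset's bounding boxes. The sketch below is illustrative, not CVEDIA's tooling: it assumes boxes are given as `(x, y, w, h)` pixels with a top-left origin, uses the COCO-style area thresholds (small below 32², medium below 96²), and measures center bias as the share of box centers falling in the middle cell of a 3×3 grid. All function names are hypothetical.

```python
from collections import Counter

# COCO-style area thresholds in pixels: small < 32^2, medium < 96^2, else large.
SMALL_MAX = 32 ** 2
MEDIUM_MAX = 96 ** 2


def size_bucket(w, h):
    """Classify a box by area using COCO-style small/medium/large thresholds."""
    area = w * h
    if area < SMALL_MAX:
        return "small"
    if area < MEDIUM_MAX:
        return "medium"
    return "large"


def position_cell(x, y, w, h, img_w, img_h, grid=3):
    """Return the (row, col) grid cell that contains the box center."""
    cx = x + w / 2
    cy = y + h / 2
    col = min(int(cx / img_w * grid), grid - 1)
    row = min(int(cy / img_h * grid), grid - 1)
    return row, col


def bias_report(boxes, img_w, img_h, grid=3):
    """Summarize size and position distributions of (x, y, w, h) boxes.

    Returns (size_counts, center_fraction), where center_fraction is the
    share of boxes whose center lies in the middle grid cell.
    """
    sizes = Counter()
    cells = Counter()
    for x, y, w, h in boxes:
        sizes[size_bucket(w, h)] += 1
        cells[position_cell(x, y, w, h, img_w, img_h, grid)] += 1
    total = sum(cells.values())
    middle = (grid // 2, grid // 2)
    center_fraction = cells[middle] / total if total else 0.0
    return sizes, center_fraction
```

With an unbiased 3×3 layout, `center_fraction` would sit near 1/9; values far above that, or a `sizes` counter dominated by `"medium"`, point to the biases listed above.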

What the Data Reveals

Score Amplitude

Wide gaps between the best and worst cross-domain scores across all real datasets. KITTI and Cityscapes showed the largest performance deltas.
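The page does not define score amplitude precisely. One plausible reading, assumed here, is the range (max minus min) of a model's scores when it is trained on one dataset and evaluated on each of the others. The sketch takes a hypothetical nested dict of scores (for example mAP) and excludes the in-domain entry.

```python
def cross_domain_amplitude(scores):
    """Per training dataset, return max - min of its cross-domain scores.

    scores: dict mapping train_name -> {test_name: metric}.
    In-domain entries (test_name == train_name) are excluded.
    """
    amplitudes = {}
    for train, row in scores.items():
        cross = [v for test, v in row.items() if test != train]
        if cross:
            amplitudes[train] = max(cross) - min(cross)
    return amplitudes
```

A large amplitude means a model's usefulness depends heavily on which target domain it lands in.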

Annotation Quality

Datasets with inconsistent or erroneous annotations showed impaired convergence and underperformed even relative to baseline metrics.

Feature Overfitting

Significant degradation under cross-domain evaluation reveals dataset-specific feature overfitting. Models learn the camera, not the object.
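Feature overfitting shows up as a gap between in-domain and cross-domain performance. As an illustrative sketch, assuming the same hypothetical `train -> {test: score}` layout, the relative drop from the in-domain score to the mean cross-domain score can be computed like this:

```python
from statistics import mean


def generalization_gap(scores):
    """Relative drop from in-domain score to mean cross-domain score.

    scores: dict train_name -> {test_name: metric}; each row must include
    its own in-domain entry. A result of 0.4 means cross-domain scores are,
    on average, 40% below the in-domain score.
    """
    gaps = {}
    for train, row in scores.items():
        in_domain = row[train]
        cross = [v for test, v in row.items() if test != train]
        if cross and in_domain > 0:
            gaps[train] = (in_domain - mean(cross)) / in_domain
    return gaps
```

A model that has "learned the camera" scores well in its own column and shows a large gap everywhere else.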

Controllable Data Generation at Scale

Bias Mitigation by Design

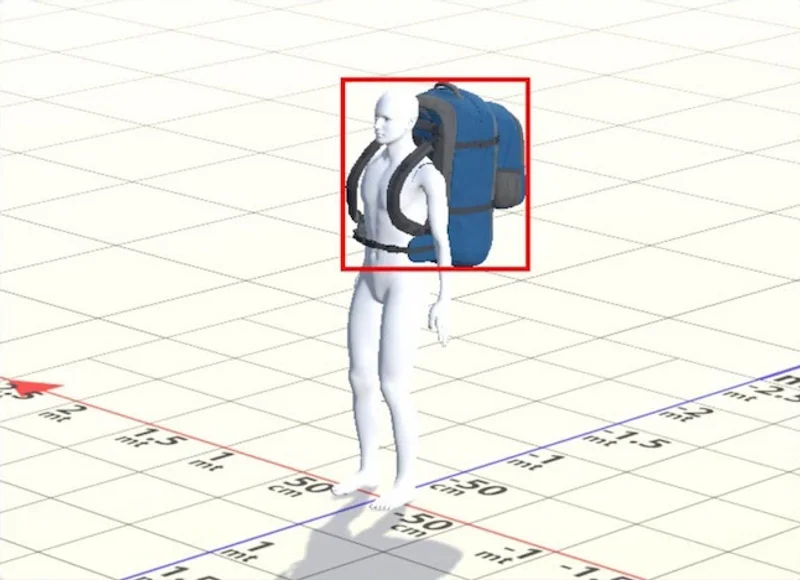

Systematically control POV distribution, lighting conditions, object placement, and occlusion levels. Eliminate the hidden biases that cause domain shift failures.
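Controlling these factors amounts to sampling each scene parameter from an explicit, chosen distribution instead of inheriting whatever a real camera happened to record. The sketch below is a minimal illustration, not CVEDIA's generator: the parameter names and ranges are hypothetical, and every value is drawn uniformly so that, for example, object positions cover the whole frame rather than clustering at its center.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneParams:
    camera_height_m: float    # hypothetical camera mounting height
    camera_pitch_deg: float   # downward tilt; controls point of view
    sun_elevation_deg: float  # negative values approximate night
    object_u: float           # normalized horizontal position in frame, 0..1
    object_v: float           # normalized vertical position in frame, 0..1
    occlusion: float          # fraction of the object hidden, 0..0.8


def sample_scene(rng):
    """Draw every parameter uniformly from an explicit (assumed) range."""
    return SceneParams(
        camera_height_m=rng.uniform(2.0, 12.0),
        camera_pitch_deg=rng.uniform(5.0, 60.0),
        sun_elevation_deg=rng.uniform(-20.0, 70.0),
        object_u=rng.uniform(0.0, 1.0),
        object_v=rng.uniform(0.0, 1.0),
        occlusion=rng.uniform(0.0, 0.8),
    )


def sample_batch(n, seed=0):
    """Generate n scene configurations reproducibly from a seed."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(n)]
```

Seeding a dedicated `random.Random` keeps each batch reproducible, and swapping a uniform draw for another distribution is how a specific condition (more night scenes, heavier occlusion) would be deliberately over-represented.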

Edge Case Injection

Generate rare scenarios on demand: fog, snow, glare, unusual angles, partial occlusion. Train for conditions your real-world dataset would never include.
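As one example of how a rare condition can be generated rather than waited for, fog is often approximated with the standard atmospheric scattering model, I = J·t + A·(1 − t) with transmission t = exp(−β·d). This is a commonly used technique swapped in for illustration, not necessarily how CVEDIA renders fog; the sketch assumes a clear image and a per-pixel depth map are available from the renderer.

```python
import numpy as np


def add_fog(image, depth, beta=0.05, airlight=0.9):
    """Apply homogeneous fog using the atmospheric scattering model.

    image:    float array (H, W, 3) in [0, 1], the clear scene J
    depth:    float array (H, W), distance to the camera in meters
              (assumed to come from the renderer)
    beta:     extinction coefficient per meter; larger means denser fog
    airlight: global atmospheric light A in [0, 1]
    """
    t = np.exp(-beta * depth)[..., np.newaxis]  # transmission, shape (H, W, 1)
    return image * t + airlight * (1.0 - t)
```

Varying `beta` across samples gives a controllable range from light haze to dense fog, with distant objects fading first, as they do in real fog.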

Pixel-Perfect Annotation

Zero annotation error. Every bounding box, segmentation mask, and keypoint is mathematically precise, with no human labeling variance.
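Annotations can be exact because the renderer knows which pixel belongs to which object. A minimal sketch, assuming the renderer outputs a per-pixel instance ID map with `0` as background (an assumption, not a documented CVEDIA format), derives tight bounding boxes directly from that map:

```python
import numpy as np


def bbox_from_mask(mask):
    """Return the tight (x_min, y_min, x_max, y_max) box of a boolean mask.

    Coordinates are inclusive pixel indices; returns None for an empty mask.
    """
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())


def boxes_from_instance_map(instance_map, background=0):
    """Map each instance ID in a rendered ID map to its tight bounding box."""
    boxes = {}
    for inst_id in np.unique(instance_map):
        if inst_id == background:
            continue
        boxes[int(inst_id)] = bbox_from_mask(instance_map == inst_id)
    return boxes
```

Because boxes are computed from pixel ownership rather than drawn by hand, the same scene always yields the same labels.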

Privacy-Safe Training

Train on synthetic scenes, not real people. Deploy in defense, healthcare, and critical infrastructure without exposing surveillance data.

Read the Full Research

Explore our technical deep-dive on synthetic data and model generalization.